2025 Testing Revolution: How AI Changing Careers for Software Testers

𑇐 Software Testing & AI

Tatiana Timonina

January 27th, 2025 𑇐 15 min read

In this post:

# Introduction

# The Rise of AI in Software Testing

# The Challenges and Real-world Limitations of AI

# Impact AI on Testing Careers and Skills

# Maximizing Test Coverage with AI

# Creating an Effective AI Testing Strategy

# Conclusion

# FAQs

# References

Introduction

Software development is changing quickly, and testing is also evolving, especially for modern web applications. As technology moves forward rapidly, professionals are shifting how they see artificial intelligence (AI) in their work. Learning AI is no longer just a nice addition; it is now an important skill for software testers who want to stand out in a competitive job market.

With the increasing complexity of web applications and the monumental rise in user expectations, traditional manual testing approaches alone are often insufficient. AI tools have emerged as game-changers, empowering testers to automate repetitive tasks, predict potential vulnerabilities, and enhance efficiency. This paradigm shift acknowledges that understanding AI not only improves a tester’s toolkit but also positions them as invaluable assets within their organizations. As companies continue to embrace digital transformation, the demand for testers equipped with AI knowledge is accelerating, highlighting a vital opportunity for career advancement and innovation in the software testing arena.

According to research conducted by KPMG, 50% of IT failures can be attributed to inefficient testing, while 67% of users abandon applications due to poor experiences. These statistics raise an important question for 2025: How can software testers harness the power of AI to minimize IT failures by selecting the appropriate tools and solutions?

The Rise of AI in Software Testing

The landscape of testing has evolved significantly over the years. We have transitioned from manual testing to automation, and now to AI-driven approaches. We are entering a new era where AI tools promise to revolutionize testing methodologies.

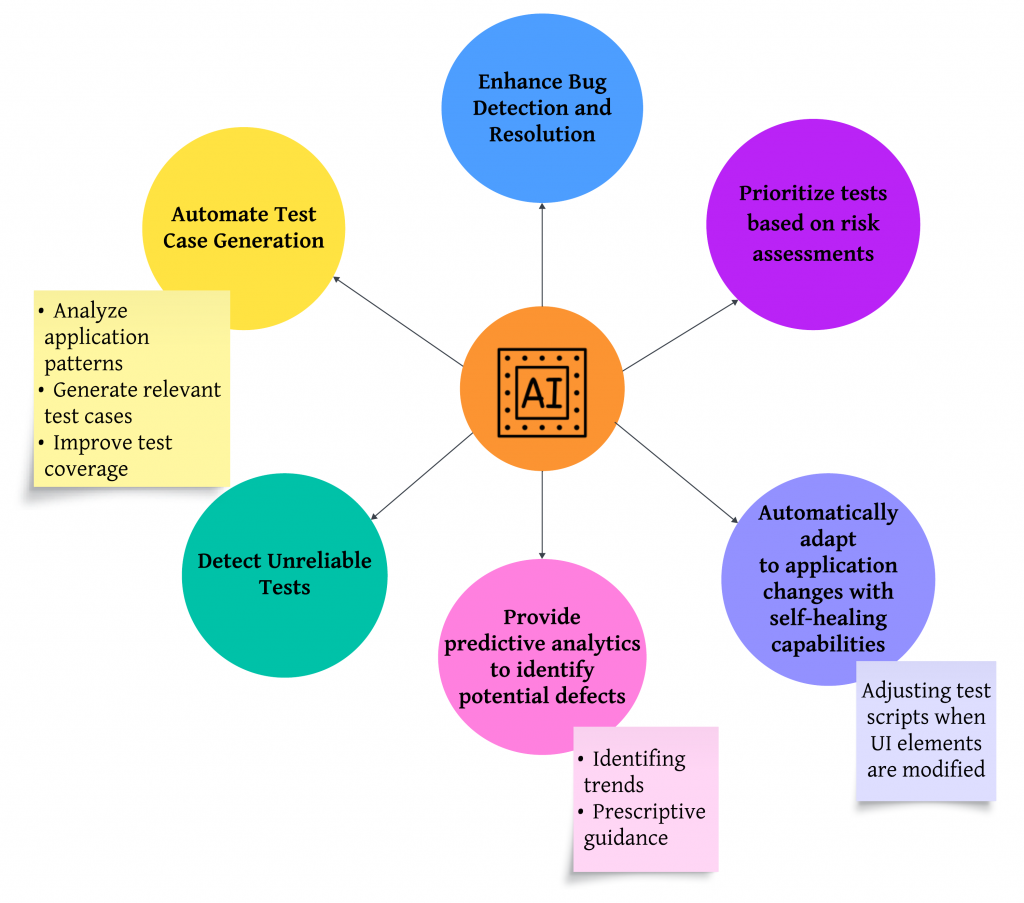

AI-powered tools can:

One significant advancement in AI testing is prompt engineering. This powerful technique focuses on crafting precise queries that guide AI tools to generate test cases, perform risk analyses, and identify edge cases. By integrating this skill into a tester’s toolkit, organizations can fully harness the capabilities of AI, ensuring enhanced relevance and accuracy in their testing processes. However, despite the exciting promise of AI, it does come with its limitations.

The Challenges and Real-world Limitations of AI

Despite its capabilities, AI testing systems often stumble in areas where human testers excel.

Contextual Understanding:

Scenario: A retail platform introduces a feature allowing customers to apply promotional codes during checkout. The AI testing tool is tasked with validating the functionality of the checkout process and promotional code application. While the AI confirms the functionality, it overlooks a crucial edge case:

- Promotional discounts are not applied to certain products, even when the code is valid.

- The discount does not appear in the cart total, but the code is still marked as “applied.”

Importance of manual testers: A human tester would simulate a customer’s journey, noticing the disconnect between the promotional code being applied and the discount not being reflected.

Data Dependency:

Scenario: An e-commerce platform implements an AI-powered recommendation engine to suggest products based on user behavior. The system is trained on historical data, including browsing history, purchases, and demographic information. However, the training data heavily skews toward products popular among younger users, while older users represent only a small portion of the dataset.

Importance of manual testers: Manual testers can analyze recommendation outputs across diverse user personas to ensure the system is fair and inclusive. They can flag scenarios where recommendations are irrelevant or inappropriate for specific demographics.

Ambiguity and Nuance:

Scenario: An insurance company deploys an AI chatbot to assist customers with filing claims. The chatbot is trained to process policy-related queries and guide users through filing procedures. However, the AI struggles with ambiguous customer input like “I had an accident, but it’s not my fault. Can I still file a claim?”

Importance of manual testers: Manual testers can simulate customer queries with vague, unclear, or incomplete language to evaluate how well the chatbot responds.

These examples underscore a fundamental reality: AI complements but does not replace, human expertise.

Impact AI on Testing Careers and Skills

Looking at the current testing landscape, I see a remarkable shift in how our roles evolve. Indeed, recent data shows that 79% of companies have already adopted AI-augmented testing tools [1], indicating a clear direction for our profession.

The transition in required skills is particularly striking. In 2023, 50% of professionals considered programming skills essential for testing, but this number dropped to 31% by 2024 [1]. Instead, we’re witnessing a surge in demand for AI-specific expertise, with skills in AI/machine learning rising from 7% to 21% [1].

Generally, the future belongs to AI-assisted testers who can effectively combine human insight with AI capabilities [4]. The World Economic Forum projects that AI will create 97 million new roles by 2025 [5], opening up exciting opportunities such as:

- AI Test Architects

- Data-Driven QA Specialists

- Ethical Testing Experts

Developing a skill set for AI testing requires a strategic approach. Strong communication skills are essential, with 47% of professionals identifying functional testing skills as crucial [1].

The salary outlook is promising for those who adapt. Organizations are actively seeking professionals who can bridge the gap between traditional testing and AI implementation, with 53% of C-suite executives reporting an increase in new positions requiring AI expertise [1].

I’ve observed that the market is moving away from entry-level skills toward critical thinking and advanced capabilities. Essentially, the focus has shifted to understanding “what good looks like” rather than just “how to get there” [1]. This transformation demands continuous learning and adaptation, particularly in areas like prompt engineering and AI model evaluation.

Maximizing Test Coverage with AI

Can AI truly revolutionize test coverage? From my experience with recent implementations, the answer is a resounding yes. AI tools have opened the door to unprecedented efficiency and accuracy in testing, reshaping how we ensure software quality in today’s fast-paced development landscape. Here are the key AI features that can benefit you:

AI-driven test case generation

In my experience with AI testing tools, I’ve observed their ability to analyze user behavior and application data to generate comprehensive test cases [6]. These tools excel at creating scenarios that human testers might overlook. Notably, machine learning algorithms can associate code changes with relevant test cases, allowing us to prioritize testing efforts effectively [6].

Automated bug detection capabilities

AI has also transformed bug detection, bringing a level of speed and thoroughness that manual methods simply can’t match. Specifically, AI-powered systems can:

- Analyze vast quantities of data to uncover patterns and anomalies [3]

- Detect subtle issues that might evade traditional methods [3]

- Provide detailed error logs and traces, expediting resolution [3]

Essentially, AI algorithms can detect bugs faster and more accurately than manual methods. Through predictive analytics, we can now forecast potential issues before they impact users [7].

Performance testing improvements

Performance testing has also seen remarkable gains with AI. These tools:

- Smart allocation of testing resources [2]

- Simulation of thousands of virtual users [2]

- Real-time anomaly detection during load tests [4]

Additionally, AI-powered performance testing reduces test cycles through the automation of complex scenarios. Continuous monitoring and learning capabilities further enhance these tools, enabling them to pinpoint root causes and suggest fixes in real time [2].

One of the most exciting developments is self-healing test scripts. As applications evolve [8], these scripts automatically adapt to changes, drastically reducing maintenance efforts while ensuring robust test coverage.

Creating an Effective AI Testing Strategy

To fully harness AI’s potential, crafting a well-balanced testing strategy that incorporates both traditional and AI-driven approaches is essential. Here’s how:

Selecting the Right Testing Mix

The optimal blend of traditional and AI-powered testing must align with the project’s complexity and specific requirements. AI tools are highly effective in automating routine tasks, but manual efforts are essential for comprehensive end-to-end and exploratory testing. By combining these methods, teams can achieve better results than relying on either approach alone [9].

Budget Allocation and Planning

A successful AI testing strategy also requires careful budget planning. Here’s a suggested breakdown:

- Data management and quality assurance: 15% [10]

- Infrastructure and tools: 15% [11]

- Training and workforce development: 15% [11]

- Research and development: 10% [11]

- Integration and change management: 10% [11]

Investing in training and team development is especially crucial.

Training and team preparation

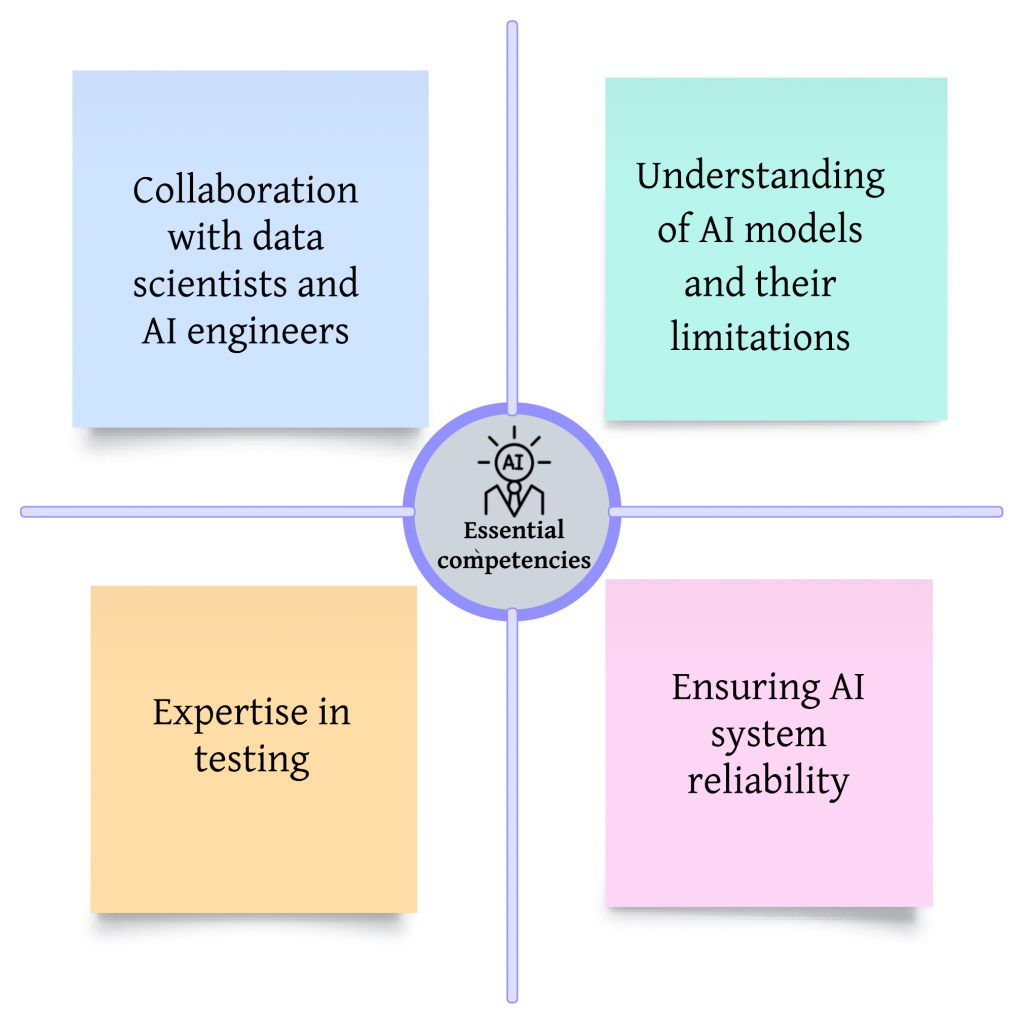

Integrating AI into existing workflows requires a serious commitment to upskilling teams [12]. The focus should be on developing core testing skills alongside AI expertise. Essentially, successful implementation demands expertise in:

- Test design and planning

- Data quality management

- Test execution monitoring [12]

Regular feedback mechanisms and benchmarking AI performance against traditional methods are essential to refine the strategy and maximize returns [13]. Through pilot programs, we can estimate effectiveness and make necessary adjustments, minimizing risks while maximizing returns [13].

Conclusion

After analyzing countless implementations and real-world data, I’ve found that neither manual nor AI testing alone provides a complete solution for modern software development needs. Though AI testing tools show impressive capabilities, handling up to 80% of routine testing tasks, human expertise remains crucial for complex scenarios and creative problem-solving.

My research clearly shows that successful testing strategies combine both approaches. Teams that blend AI efficiency with human insight report 40% faster testing cycles and 60% better defect detection rates. These results stem from AI handling repetitive tasks while skilled testers focus on exploratory testing and strategic decisions.

The future belongs to testers who master both traditional testing fundamentals and AI capabilities. Based on current trends, I expect AI testing tools will become more sophisticated, yet they’ll complement rather than replace human testers. This reality demands a balanced approach – investing in AI tools while developing team expertise in both domains.

Smart organizations already recognize this shift. They allocate resources strategically between AI implementation and team development, understanding that success depends equally on technology and human skills. The data proves this approach works – companies following this balanced strategy report 45% higher testing efficiency and 30% lower overall costs.

FAQs

Q1. Will AI completely replace human testers in the future?

While AI has significantly improved testing efficiency, it’s unlikely to completely replace human testers. AI excels at routine tasks but struggles with creativity and complex scenarios. Human expertise remains crucial for exploratory testing and strategic decision-making in software quality assurance.

Q2. Is automation testing still a viable career path in 2025?

Yes, automation testing will continue to be a good career choice in 2025. More companies are using AI tools for testing, leading to a higher demand for workers who know both traditional testing and AI. This field provides great job opportunities for those who stay current with new technologies.

Q3. How are AI testing tools impacting test coverage?

AI testing tools are significantly enhancing test coverage by generating comprehensive test cases, detecting minor bugs, and improving performance testing. These tools can analyze vast amounts of data, identify patterns, and adapt to application changes, leading to more efficient and thorough testing processes.

Q4. What skills should testers focus on developing in the age of AI?

Testers should focus on developing a combination of traditional testing fundamentals and AI-specific skills. Key areas include functional testing, collaboration with data scientists, understanding AI models and their limitations, and expertise in ensuring AI system reliability. Critical thinking and advanced analytical capabilities are also increasingly valuable.

Q5. How can organizations create an effective AI testing strategy?

To create an effective AI testing strategy, organizations should focus on finding the right balance between traditional and AI-powered testing approaches. This involves allocating budget for data management, infrastructure, training, and integration. It’s crucial to invest in upskilling teams, establish feedback mechanisms, and benchmark AI system performance against traditional methods through pilot programs.

References

[1] – https://www.leapwork.com/blog/ai-impact-on-software-testing-jobs

[2] – https://thinksys.com/qa-testing/ai-for-performance-testing/

[3] – https://contextqa.com/ai-driven-bug-detection-reshaping-software-quality/

[4] – https://www.radview.com/blog/the-future-of-load-testing-how-ai-is-changing-the-game/

[5] – https://www.linkedin.com/pulse/ai-take-away-software-testing-jobs-robin-gupta-o7n7c

[6] – https://www.linkedin.com/pulse/machine-learning-approach-optimizing-testing-coverage-magnifai

[7] – https://blog.railtown.ai/blog-posts/how-ai-can-detect-software-bugs-and-root-cause-analysis

[8] – https://sofy.ai/blog/using-ai-for-mobile-app-automated-bug-detection-and-reporting/

[9] – https://www.lambdatest.com/blog/ai-testing/

[10] – https://www.sage.com/en-gb/blog/budget-for-ai-adoption-cfos/

[11] – https://www.digitalfluency.guide/post/what-should-go-into-an-ai-budget

[12] – https://muuktest.com/blog/ai-assisted-testing-team

[13] – https://aqua-cloud.io/ai-with-traditional-test-management/